A New Dawn for Artificial Intelligence Hardware

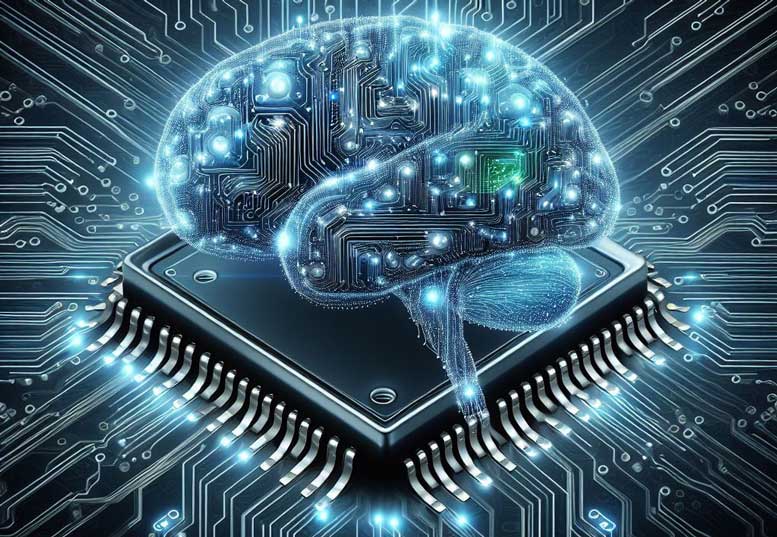

As artificial intelligence (AI) applications grow in both scale and complexity, traditional computing systems are struggling to keep up. Conventional CPUs and GPUs follow the von Neumann architecture, which separates memory from processing units, so data must constantly shuttle between the two. This structure, although effective for general-purpose computing, becomes a bottleneck when it comes to emulating the massively parallel and adaptive behavior of the human brain.

This is where neuromorphic computing enters the picture: an approach to designing computing systems that mimic the neural structures and functions of the human brain. By building systems inspired by biological neurons and synapses, neuromorphic computing promises to change the way machines learn, adapt, and process information in real time.

What is Neuromorphic Computing?

Neuromorphic computing is a field of computer engineering that aims to develop hardware and algorithms modeled after the human nervous system. The term “neuromorphic” was coined in the late 1980s by Carver Mead, who envisioned electronic systems that replicate the structure and function of biological neural networks.

In simple terms, neuromorphic computing attempts to build machines that “think” more like humans, using spiking neural networks (SNNs) instead of traditional artificial neural networks (ANNs). These systems process information via electrical pulses (spikes), in a manner similar to how neurons in the brain communicate.

Key Characteristics of Neuromorphic Systems

Neuromorphic chips differ significantly from traditional computing hardware. Some of their core features include:

A. Event-Driven Processing

Instead of processing information in sequential steps, neuromorphic systems operate in an event-driven manner. Computations are triggered only when relevant spikes are detected, reducing unnecessary operations.
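To make the saving concrete, here is a minimal sketch in plain Python that compares a step-by-step update, which touches every connection, with an event-driven one, which only visits connections of inputs that actually spiked. The function names, the tiny weight matrix, and the operation counter are illustrative assumptions, not the behavior of any specific chip.

```python
def dense_update(weights, inputs):
    """Clock-driven style: visit every connection, even for silent inputs."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, x in enumerate(inputs):
        for j in range(n_out):
            out[j] += weights[i][j] * x
            ops += 1
    return out, ops


def event_driven_update(weights, spike_indices):
    """Event-driven style: visit only the rows of inputs that spiked."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i in spike_indices:
        for j in range(n_out):
            out[j] += weights[i][j]  # a spike contributes its full weight
            ops += 1
    return out, ops


# Hypothetical 4-input, 2-output layer; only inputs 1 and 3 spike.
weights = [[0.5, 0.25], [1.0, 2.0], [0.125, 0.75], [1.5, 0.5]]
inputs = [0, 1, 0, 1]
spikes = [i for i, s in enumerate(inputs) if s]

dense_out, dense_ops = dense_update(weights, inputs)
event_out, event_ops = event_driven_update(weights, spikes)
print("dense:", dense_out, "operations:", dense_ops)
print("event:", event_out, "operations:", event_ops)
```

Both functions produce the same output, but the event-driven version performs work proportional to the number of spikes rather than the number of inputs, which is where the benefit comes from when activity is sparse.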

B. Asynchronous Operation

Unlike CPUs that rely on a global clock signal, neuromorphic chips operate asynchronously, meaning each part of the system can process information independently.
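In software, one rough way to picture clockless operation is a priority queue of timestamped spike events: nothing happens between events, and each event is handled whenever it falls due. The sketch below assumes a simplified model in which every neuron simply relays a spike to its targets after a fixed delay; `run_events`, the neuron names, and the delays are hypothetical.

```python
import heapq


def run_events(initial_events, connections, max_events=100):
    """Process spike events in timestamp order, with no global clock tick.

    initial_events: list of (time, neuron_id) tuples
    connections: dict mapping neuron_id -> list of (target_id, delay)
    Returns the ordered log of (time, neuron_id) events that were handled.
    """
    queue = list(initial_events)
    heapq.heapify(queue)
    log = []
    while queue and len(log) < max_events:
        t, neuron = heapq.heappop(queue)  # earliest pending event
        log.append((t, neuron))
        # Simplification: every spike is relayed to each target after its delay.
        for target, delay in connections.get(neuron, []):
            heapq.heappush(queue, (t + delay, target))
    return log


# Hypothetical network: "a" and "b" both project to "c" with different delays.
connections = {"a": [("c", 2.5)], "b": [("c", 0.5)]}
log = run_events([(0.0, "a"), (1.0, "b")], connections)
for t, neuron in log:
    print(f"t={t}: neuron {neuron} spikes")
```

Real asynchronous hardware does this with handshaking circuits rather than a software queue, so this is only an analogy for the ordering behavior.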

C. Low Power Consumption

Because their circuits stay largely idle until a spike arrives, neuromorphic systems can consume significantly less power than conventional processors on sparse workloads, making them well suited to edge devices and battery-powered systems.

D. High Parallelism

Neuromorphic architectures allow for massive parallel processing, akin to how the human brain operates with billions of neurons working simultaneously.

E. Adaptability and Learning

Using SNNs and local learning rules such as Spike-Timing Dependent Plasticity (STDP), neuromorphic systems can learn and adapt without constant supervision.
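A common textbook form of STDP is the pair-based rule: a synapse is strengthened when the presynaptic spike arrives shortly before the postsynaptic one, and weakened when the order is reversed, with the effect decaying exponentially as the spikes move apart in time. The sketch below assumes that formulation; the parameter names and default values (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`) are illustrative, not taken from any particular platform.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012,
               tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (times in milliseconds)."""
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post: potentiation, weaker for larger gaps.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fired before pre: depression, weaker for larger gaps.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


print(stdp_delta(t_pre=10.0, t_post=15.0))  # positive: connection strengthened
print(stdp_delta(t_pre=15.0, t_post=10.0))  # negative: connection weakened
```

The rule is local in the sense that it needs only the spike times of the two neurons sharing the synapse, with no global error signal.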

How Neuromorphic Computing Mimics the Brain

To understand how neuromorphic computing mimics biological brains, we must examine how real neurons and synapses function.

In the human brain:

- Neurons communicate via electrochemical spikes.
- Synapses adjust their connection strength based on experience (plasticity).
- Signals are transmitted in parallel, with minimal energy.

Neuromorphic chips replicate this behavior using:

A. Silicon Neurons

Electronic circuits that simulate the behavior of biological neurons, firing spikes only when a certain threshold is crossed.
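The threshold behavior is often modeled in software as a leaky integrate-and-fire (LIF) neuron. The following is a minimal discrete-time sketch under that assumption; the function name and the `threshold`, `leak`, and `v_reset` values are chosen for illustration and do not describe a specific silicon circuit.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.5, v_reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step.
    Returns the list of time steps at which the neuron spiked.
    """
    v = 0.0  # membrane potential
    spike_times = []
    for t, i_in in enumerate(input_current):
        v = leak * v + i_in  # decay the old potential, then add new input
        if v >= threshold:
            spike_times.append(t)
            v = v_reset      # reset after firing
    return spike_times


strong = simulate_lif([0.6] * 6)   # input large enough to reach threshold
weak = simulate_lif([0.4] * 50)    # potential settles below threshold
print("strong input spikes at steps:", strong)
print("weak input spikes at steps:", weak)
```

With the weak input the potential levels off below the threshold, so the neuron stays silent and, in an event-driven system, triggers no downstream work.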

B. Artificial Synapses

Memory components that modulate the strength of connections between artificial neurons, enabling learning from experience.

C. SNNs (Spiking Neural Networks)

Unlike traditional ANNs that process continuous values, SNNs operate on discrete spikes, closely resembling real brain behavior.
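One simple way to bridge the two representations is rate coding, where a continuous value is expressed as the fraction of time steps that carry a spike. The sketch below uses a deterministic accumulator to do this; it is one of several possible encodings (timing-based codes also exist), and the function names are hypothetical.

```python
def rate_encode(value, n_steps):
    """Encode a value in [0, 1] as a 0/1 spike train of length n_steps."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    acc = 0.0
    train = []
    for _ in range(n_steps):
        acc += value
        if acc >= 1.0:       # enough has accumulated: emit a spike
            train.append(1)
            acc -= 1.0
        else:
            train.append(0)
    return train


def rate_decode(train):
    """Recover the approximate value as the fraction of steps with a spike."""
    return sum(train) / len(train)


train = rate_encode(0.25, 8)
print(train)
print(rate_decode(train))
```

Longer spike trains give a finer approximation of the original value, at the cost of more time steps per input.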

D. Local Learning Rules

Algorithms such as Hebbian learning and STDP update each connection using only the activity of the two neurons it links, allowing the system to self-organize based on data patterns without a global error signal.
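The simplest Hebbian rule is often summarized as "neurons that fire together wire together": a weight grows in proportion to the product of presynaptic and postsynaptic activity. Below is a minimal sketch of one such update step, assuming binary activity vectors and a hypothetical learning rate `lr`. Note that this plain form grows without bound, so practical variants usually add normalization or decay.

```python
def hebbian_update(weights, pre, post, lr=0.1):
    """Return new weights after one Hebbian step.

    weights[i][j] connects presynaptic neuron i to postsynaptic neuron j.
    Each weight changes by lr * pre[i] * post[j], using only local activity.
    """
    return [
        [w + lr * pre[i] * post[j] for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


weights = [[0.0, 0.0], [0.0, 0.0]]
pre = [1, 0]    # only the first input neuron is active
post = [1, 1]   # both output neurons are active
updated = hebbian_update(weights, pre, post, lr=0.5)
print(updated)
```

Only the connections leaving the active input neuron change; the silent input's weights are left as they were.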

Leading Neuromorphic Chips and Platforms

Several organizations and research institutions have made significant progress in building neuromorphic hardware. Notable projects include:

A. IBM TrueNorth

A neuromorphic chip developed by IBM that contains over 1 million neurons and 256 million synapses. It is designed to perform pattern recognition tasks at extremely low power levels.

B. Intel Loihi

A research chip developed by Intel with adaptive learning capabilities and support for SNNs. Loihi aims to bring neuromorphic processing closer to real-world applications such as robotics and IoT.

C. SpiNNaker (University of Manchester)

Short for “Spiking Neural Network Architecture,” this project is designed to simulate up to a billion neurons in real time on a massively parallel digital machine built from ARM processor cores.

D. BrainScaleS (Heidelberg University)

A neuromorphic system based on analog circuits, capable of simulating neuron behavior much faster than real-time.

Applications of Neuromorphic Computing

While still in the early stages of commercialization, neuromorphic systems are poised to impact various industries and applications:

A. Edge AI and IoT

Low-power neuromorphic chips are well suited to edge devices that need to process data locally without cloud dependence, such as smart cameras, sensors, and drones.

B. Robotics

Neuromorphic hardware enables robots to react and adapt in real time, mimicking human reflexes and learning.

C. Healthcare

Neuromorphic chips are being explored for brain-machine interfaces, prosthetics, and real-time neurological monitoring, where low-power, low-latency processing of neural signals is valuable.

D. Cybersecurity

Neuromorphic systems can detect anomalies and intrusions in data streams through adaptive learning.

E. Smart Wearables

By enabling real-time processing with minimal energy, neuromorphic chips can be embedded in wearables for health monitoring, gesture recognition, and more.

F. Autonomous Vehicles

Neuromorphic processors can enable fast decision-making and perception in self-driving systems, complementing traditional AI accelerators.

Advantages Over Traditional Computing

Why choose neuromorphic systems over conventional CPU/GPU-based models?

A. Ultra-Low Power Consumption

Because they operate on sparse, event-driven data, neuromorphic systems have been reported to use orders of magnitude less energy than traditional processors on suitable workloads, with savings of up to 1000x cited for some tasks.

B. Real-Time Processing

The asynchronous and parallel nature of neuromorphic hardware enables real-time data processing, essential for dynamic environments.

C. Scalability

Neuromorphic architectures are designed to scale by adding more cores or chips, allowing researchers to simulate millions of neurons and synapses.

D. On-Chip Learning

Some neuromorphic platforms offer on-device learning, eliminating the need for cloud-based retraining.

E. Robustness

Thanks to brain-inspired design, neuromorphic chips exhibit resilience to noise and fault-tolerant behavior, just like the human brain.

Challenges and Limitations

Despite its promise, neuromorphic computing is not without challenges:

A. Software and Tooling Gaps

Current programming tools and frameworks are primarily built for von Neumann architectures, making it hard to develop for neuromorphic platforms.

B. Lack of Standards

The field lacks unified standards and benchmarks, which slows down cross-platform development and testing.

C. Complexity of SNNs

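Spiking neural networks are less well understood and harder to train than traditional ANNs, in part because a spike is a non-differentiable event, so standard backpropagation cannot be applied directly.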

D. Integration with Existing Systems

Deploying neuromorphic hardware into legacy systems requires extensive infrastructure adaptation.

E. Limited Commercial Availability

As of now, neuromorphic chips are mostly confined to research labs and prototype projects.

The Future of Brain-Inspired Computing

The neuromorphic computing landscape is rapidly evolving. With increased funding and research, the next decade may witness significant breakthroughs, including:

A. Commercial Neuromorphic Products

From wearable AI chips to embedded processors for robots and vehicles, neuromorphic chips are moving toward mass production.

B. Hybrid Systems

Neuromorphic processors may be combined with CPUs, GPUs, and quantum computing elements, with each component handling the workloads it suits best.

C. Better Software Ecosystems

Development of user-friendly frameworks (like Intel’s Lava) will make neuromorphic computing more accessible to developers.

D. Integration with Neural Interfaces

Brain-computer interfaces (BCIs) may use neuromorphic hardware to bridge digital and biological systems.

E. Education and Training

Universities are now introducing neuromorphic modules in computer science and neuroscience programs, helping to build a skilled workforce.

Conclusion: The Brain Behind the Machine

Neuromorphic computing offers a bold vision of the future where machines not only calculate but also sense, adapt, and learn like biological organisms. While still maturing, this technology is uniquely positioned to address the limitations of conventional computing, particularly in applications requiring low power, real-time responsiveness, and adaptability.

As research continues and industry adoption grows, neuromorphic computing may well become the brain behind the next wave of intelligent devices, reshaping AI, robotics, and edge computing as we know them.